Mogon is short for Mogontiacum, the Roman name of the city from which today's "Mainz" developed over the course of history.

MOGON NHR Süd-West

Since the end of 2021, Johannes Gutenberg University Mainz (JGU) has been part of the national high-performance computing consortium NHR Süd-West. Aside from Mainz, the consortium consists of the University of Kaiserslautern-Landau (RPTU), Goethe University Frankfurt, and Saarland University.

On March 13th the new HPC cluster at JGU was inaugurated. The high-performance computer MOGON NHR Süd-West is available to scientists throughout Germany for complex computing operations and the analysis of large amounts of data. With 590 compute nodes, 75,000 CPU cores and 186 TB of main memory, as well as a file server with 8,000 TB (8 petabytes), it can run data-intensive system and model simulations. Every node comes with two AMD EPYC 7713 processors that offer 64 cores each. Beyond that, 10 compute nodes are each equipped with four NVIDIA A100 GPUs for specialized HPC workloads. The compute nodes are interconnected via HDR InfiniBand, a network interconnect standard that offers an especially high transmission rate.

MOGON NHR Süd-West | HPC cluster specifications

| VALUE | SPECIFICATION |
|---|---|
| 590 | COMPUTE NODES |
| 75,000 | CPU CORES |
| 40 | A100 TENSOR CORE GPUS |
| 186 TB | RAM |
| 8,000 TB | FILE SERVER |
| 100 GB/s | INFINIBAND NETWORK |
| 2.8 PFLOPS | MAXIMUM PERFORMANCE |

AMD EPYC 7713 processor

| VALUE | SPECIFICATION |
|---|---|
| 64 | CORES |
| 2 GHz | FREQUENCY |
| 256 MB | L3 CACHE |
| 2 | PER NODE |
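The headline figures can be cross-checked from the per-node numbers. The following is a minimal sketch, assuming the 590 nodes from the specification table, two 64-core CPUs in every node, and decimal units (1 TB = 1000 GB). The variable names are illustrative, and the per-node and per-core memory values are simple averages derived here, not official figures.

```python
# Cross-check of the MOGON NHR Süd-West headline figures.
# Inputs come from the specification tables; names are illustrative.
NODES = 590
CPUS_PER_NODE = 2
CORES_PER_CPU = 64
TOTAL_RAM_TB = 186
GPU_NODES = 10
GPUS_PER_GPU_NODE = 4

cores_per_node = CPUS_PER_NODE * CORES_PER_CPU
total_cores = NODES * cores_per_node
total_gpus = GPU_NODES * GPUS_PER_GPU_NODE

# Assumption: decimal units, 1 TB = 1000 GB.
avg_ram_per_node_gb = TOTAL_RAM_TB * 1000 / NODES
avg_ram_per_core_gb = avg_ram_per_node_gb / cores_per_node

print(f"cores per node:        {cores_per_node}")
print(f"total cores:           {total_cores}")
print(f"total A100 GPUs:       {total_gpus}")
print(f"average RAM per node:  {avg_ram_per_node_gb:.0f} GB")
print(f"average RAM per core:  {avg_ram_per_core_gb:.1f} GB")
```

The computed 75,520 cores agree with the rounded figure of 75,000 CPU cores, and 10 nodes with four GPUs each give the 40 A100 GPUs listed.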

Mogon II-Cluster

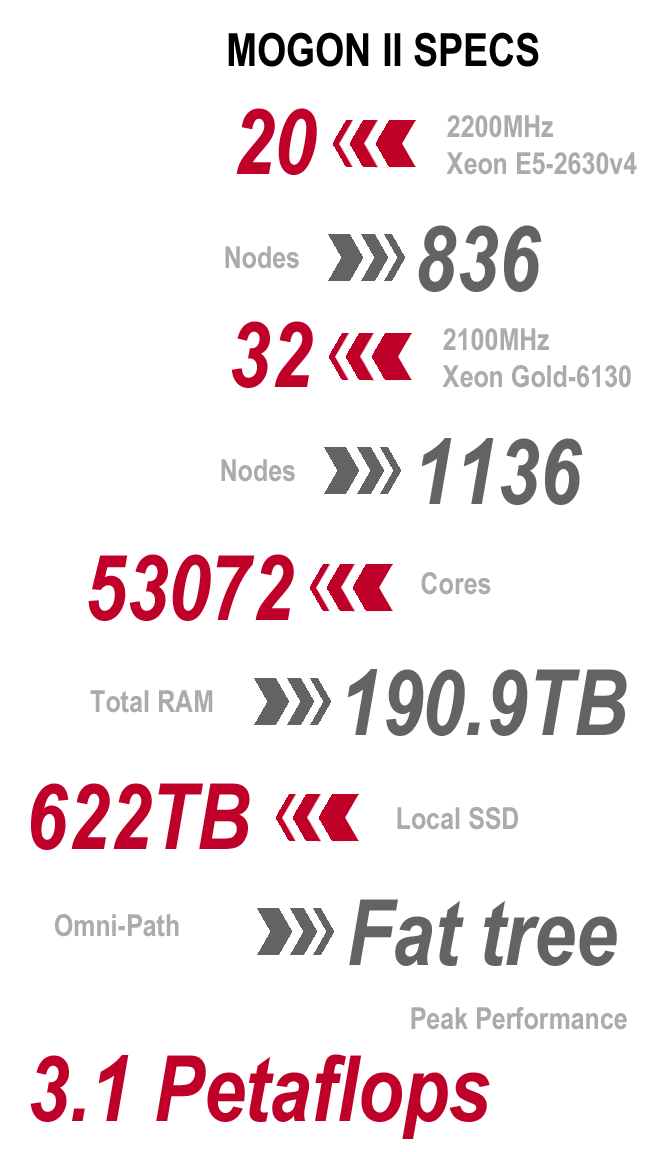

The ZDV's Mogon II cluster was acquired in 2016 and 2017. The system consists of 1,876 individual nodes, of which 822 nodes are each equipped with two 10-core Broadwell CPUs (Intel 2630v4) and 1,136 nodes each with two 16-core Skylake CPUs (Xeon Gold 6130). The nodes are connected via OmniPath at 50 Gbit/s in a fat-tree topology. In total this results in around 50,000 cores.

Of these nodes, the main memory is distributed as follows:

| NODES | RAM PER NODE | RAM PER CORE |
|---|---|---|
| 584 | 64 GiB | 3.2 GiB |
| 732 | 96 GiB | 3.0 GiB |
| 168 | 128 GiB | 6.4 GiB |
| 120 | 192 GiB | 6.0 GiB |
| 40 | 256 GiB | 12.8 GiB |
| 32 | 384 GiB | 12.0 GiB |
| 20 | 512 GiB | 25.6 GiB |
| 2 | 1024 GiB | 51.2 GiB |
| 2 | 1536 GiB | 48.0 GiB |

This corresponds to about 5 GiB per core on average.
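The per-core figures show which CPU type each memory class belongs to: dividing the RAM per node by the stated RAM per core gives either 20 cores (two 10-core Broadwell CPUs) or 32 cores (two 16-core Skylake CPUs). The sketch below illustrates that arithmetic and the total core count from the node numbers given above. The mapping to CPU types is inferred from the ratios, not stated in the text, and all names are illustrative.

```python
# (nodes, RAM per node in GiB, stated GiB per core), as listed in the text.
MEMORY_CLASSES = [
    (584, 64, 3.2), (732, 96, 3.0), (168, 128, 6.4),
    (120, 192, 6.0), (40, 256, 12.8), (32, 384, 12.0),
    (20, 512, 25.6), (2, 1024, 51.2), (2, 1536, 48.0),
]


def cores_per_node(ram_gib, gib_per_core):
    """Core count implied by a node's RAM size and its per-core figure."""
    return round(ram_gib / gib_per_core)


# Inferred core count for every memory class.
inferred = [
    (nodes, ram, cores_per_node(ram, per_core))
    for nodes, ram, per_core in MEMORY_CLASSES
]

for nodes, ram, cores in inferred:
    # Assumption: 20 cores means Broadwell, 32 cores means Skylake.
    cpu = "2 x 10-core Broadwell" if cores == 20 else "2 x 16-core Skylake"
    print(f"{nodes:4d} nodes, {ram:4d} GiB -> {cores} cores ({cpu})")

# Total cores from the node counts given for the two CPU generations.
total_cores = 822 * 2 * 10 + 1136 * 2 * 16
print(f"total cores: {total_cores}")
```

The total comes to 52,792 cores, in line with the "around 50,000" quoted above.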

Each node also has a 200 GB SSD, or a 400 GB SSD in the large nodes, for temporary files. At the time of installation, the Mogon II cluster was number 65 on the TOP500 and number 51 on the GREEN500. Mogon II is operated at JGU by the Center for Data Processing (ZDV) and the Helmholtz Institute Mainz (HIM).

Mogon-Cluster

The Mogon cluster of the ZDV was acquired in 2012 and expanded in 2013 in a second stage to include GPU nodes. The system currently consists of 555 individual nodes, each equipped with four AMD CPUs. Each CPU has 16 cores, resulting in a total of 35,520 cores. Each core is clocked at 2.1 GHz. Of these nodes, 444 are equipped with 128 GiB RAM (2 GiB / core), 96 with 256 GiB RAM (4 GiB / core) and 15 with 512 GiB RAM (8 GiB / core). Each node also provides 1.5 TB of local hard drive space for temporary files.
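The node, core and memory figures for Mogon are consistent with each other, which a few lines of arithmetic confirm. This is a minimal sketch using only the numbers from the paragraph above. The aggregate memory is a derived value, not a figure quoted in the text, and the names are illustrative.

```python
CPUS_PER_NODE = 4
CORES_PER_CPU = 16

# RAM per node in GiB -> number of nodes with that configuration.
NODES_BY_RAM = {128: 444, 256: 96, 512: 15}

cores_per_node = CPUS_PER_NODE * CORES_PER_CPU
total_nodes = sum(NODES_BY_RAM.values())
total_cores = total_nodes * cores_per_node

# RAM per core for each memory configuration.
ram_per_core = {ram: ram / cores_per_node for ram in NODES_BY_RAM}

# Aggregate memory across all nodes (derived, not quoted in the text).
total_ram_gib = sum(ram * nodes for ram, nodes in NODES_BY_RAM.items())

print(f"nodes: {total_nodes}, cores: {total_cores}")
for ram, per_core in ram_per_core.items():
    print(f"{ram} GiB per node -> {per_core:g} GiB per core")
print(f"aggregate RAM: {total_ram_gib} GiB ({total_ram_gib / 1024:g} TiB)")
```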

In addition to a small GPU training cluster, there are also 13 nodes with 4 GPUs per node (5-6 GB per GPU) and 2 nodes with 4 Xeon Phi cards per node. The GPU nodes and the two Phi nodes each have two Intel CPUs with 8 cores and 64 GB each.

Users have 1 Gbit Ethernet and QDR InfiniBand available as networks. The InfiniBand network is built as a "full" fat tree.

In addition, several private subclusters from different working groups are managed at the ZDV and made available to the respective users as queues.

HIM-Cluster

The clusters are private clusters of the Helmholtz Institute Mainz. Further information on research, funding programs and contact can be found here.

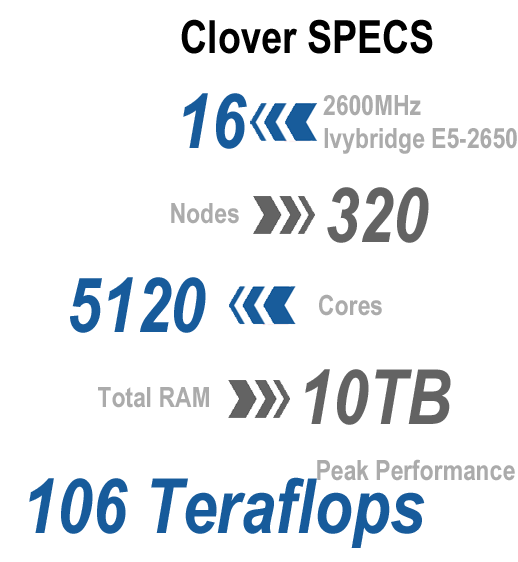

Clover started operating in 2014. It consisted of 320 nodes with 2 Intel Ivy Bridge processors each. Each CPU had 8 cores, so that the overall system had 5,120 cores. The CPUs ran at 2.6 GHz. Each node had 32 GB of RAM (2 GB / core).

The nodes were connected via QDR InfiniBand and had access to 200 TB of central storage.

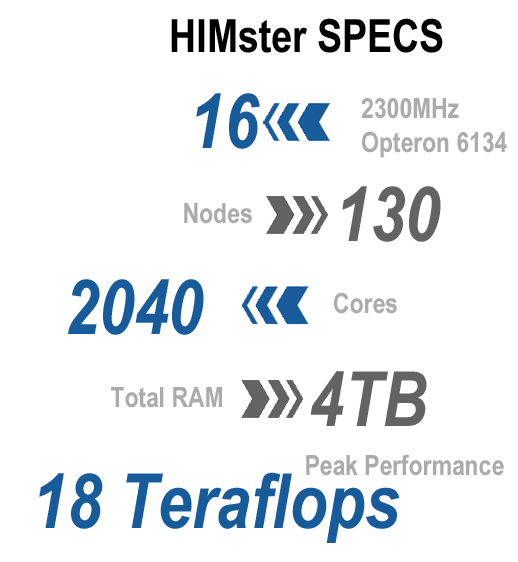

HIMster

HIMster went into operation in 2011. It consisted of 130 nodes, each with 2 AMD Opteron processors. Each CPU had 8 cores, so that the entire system had 2,080 cores. The CPUs were clocked at 2.3 GHz. Each node had 32 GB RAM (2 GB / core); 14 of the nodes had 64 GB RAM (4 GB / core).

The nodes were connected via QDR InfiniBand and had access to 557 TB of central storage running the Fraunhofer file system (FhGFS).
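Both HIM clusters follow the same layout of two 8-core CPUs per node, so their core counts and per-core memory can be derived with one small data structure. The `Cluster` class below is a hypothetical helper written for this illustration; only the numbers passed to it come from the text.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Cluster:
    """Hypothetical helper describing a homogeneous cluster."""
    name: str
    nodes: int
    cpus_per_node: int
    cores_per_cpu: int
    ram_per_node_gb: int  # standard memory configuration

    @property
    def cores_per_node(self) -> int:
        return self.cpus_per_node * self.cores_per_cpu

    @property
    def total_cores(self) -> int:
        return self.nodes * self.cores_per_node

    @property
    def ram_per_core_gb(self) -> float:
        return self.ram_per_node_gb / self.cores_per_node


clover = Cluster("Clover", nodes=320, cpus_per_node=2, cores_per_cpu=8, ram_per_node_gb=32)
himster = Cluster("HIMster", nodes=130, cpus_per_node=2, cores_per_cpu=8, ram_per_node_gb=32)

for cluster in (clover, himster):
    print(f"{cluster.name}: {cluster.total_cores} cores, "
          f"{cluster.ram_per_core_gb:g} GB per core")

# The 14 larger HIMster nodes had 64 GB each.
print(f"HIMster large nodes: {64 / himster.cores_per_node:g} GB per core")
```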